When you first jump into the AI world, you hear a lot of talk about tokens and about costs based on tokens. For someone new, they seemed like a good place to start. So what exactly are tokens? Think of them as the individual pieces of text a model works with when it processes language.

What is a token in one sentence? Tokens are the words, pieces of words, or symbols that text is broken into before an AI model reads it or generates a response.

Tokens come in different styles:

- Full words

- Subwords (smaller parts of words)

- Characters

Let’s start with Word Tokens

Imagine a sentence: "AI is cool!" With word-based tokenization, it’d break down like this:

["AI", "is", "cool!"]

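Simple, right? But models that rely only on whole-word tokens can hit limits fast: every distinct word form needs its own entry in the vocabulary, and a rare word the model has never seen has no token at all. This hurts most with languages that have complex word forms. So, to make things work more smoothly, let’s look at other token types.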
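To make the word-level split concrete, here is a minimal sketch that uses a plain whitespace split. The function name `word_tokenize` is made up for this example, and real word tokenizers usually treat punctuation more carefully, so take it as an illustration rather than how any particular model does it.

```python
def word_tokenize(text):
    # Whitespace splitting: punctuation stays attached to the neighbouring word.
    return text.split()

tokens = word_tokenize("AI is cool!")
print(tokens)  # ['AI', 'is', 'cool!']

# The weakness: "cool!" and "cool" end up as two unrelated vocabulary entries.
vocab = set(word_tokenize("AI is cool! AI stays cool"))
print(sorted(vocab))
```

Notice that the vocabulary grows with every new spelling or punctuation variant, which is the limit whole-word tokens run into.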

Subword Tokens

With subword tokenization, instead of breaking down by words, we split based on commonly used word pieces. Picture it like this:

"Understanding" might split into:

["Under", "stand", "ing"]

This approach handles unusual words better: even if the vocabulary doesn’t contain "understanding" as a whole word, the model can still make sense of the familiar sub-parts.
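Here is a rough sketch of the idea, assuming a tiny hand-written vocabulary and a greedy longest-match rule. Both are simplifications chosen for this example: real tokenizers learn their vocabulary from large amounts of text (for instance with byte pair encoding), and the function name `subword_tokenize` is hypothetical.

```python
def subword_tokenize(word, vocab):
    """Greedily take the longest vocabulary piece at each position."""
    tokens = []
    start = 0
    while start < len(word):
        end = len(word)
        # Shrink the candidate piece until it is in the vocabulary.
        while end > start and word[start:end] not in vocab:
            end -= 1
        if end == start:
            # Nothing matched: fall back to a single character.
            end = start + 1
        tokens.append(word[start:end])
        start = end
    return tokens

# Toy vocabulary, invented for this example.
toy_vocab = {"under", "stand", "ing"}

print(subword_tokenize("understanding", toy_vocab))  # ['under', 'stand', 'ing']
print(subword_tokenize("understands", toy_vocab))    # ['under', 'stand', 's']
```

The second call shows the payoff: "understands" is not in the vocabulary either, but it still breaks into known pieces plus one leftover character.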

Character Tokens

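The final approach, character tokens, splits text into individual characters. The vocabulary stays tiny, but every word turns into a long sequence. For "Understanding", you’d get: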

["U", "n", "d", "e", "r", "s", "t", "a", "n", "d", "i", "n", "g"]
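In Python, a character-level split is a one-liner, which makes the trade-off easy to see: one word becomes 13 tokens. This is only a sketch of the idea, not how a production tokenizer is built.

```python
def char_tokenize(text):
    # Every character, including spaces and punctuation, becomes its own token.
    return list(text)

char_tokens = char_tokenize("Understanding")
print(char_tokens)
print(len(char_tokens))  # 13
```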

Why Tokens Matter

Tokens are the building blocks of language models, which means every message, question, or answer you create is broken down into these smaller parts. It’s like working with the pieces of a puzzle rather than the full picture all at once: AI models can pick up on patterns better this way.

Token Limits and Model Size

Models have limits on how many tokens they can process at once (often called the context window), kind of like the amount of RAM you can use in one go. The limit usually covers both your prompt and the model’s response. For example, if you’re limited to 4,000 tokens, a prompt over that gets cut off or rejected, depending on the system.

So if you ask a model to analyze a novel paragraph by paragraph, you’ll want to keep an eye on token usage to avoid hitting a wall.
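One way to stay under a limit is to group paragraphs into batches that each fit a token budget. The sketch below assumes a crude word count as a stand-in for a real tokenizer (real token counts are usually higher), and the names `count_tokens` and `chunk_paragraphs` are made up for this example.

```python
def count_tokens(text):
    # Crude stand-in for a real tokenizer: one token per whitespace-separated word.
    return len(text.split())

def chunk_paragraphs(paragraphs, max_tokens):
    """Group consecutive paragraphs so each chunk stays within max_tokens."""
    chunks, current, used = [], [], 0
    for paragraph in paragraphs:
        n = count_tokens(paragraph)
        # Start a new chunk if adding this paragraph would exceed the budget.
        if current and used + n > max_tokens:
            chunks.append(current)
            current, used = [], 0
        current.append(paragraph)
        used += n
    if current:
        chunks.append(current)
    return chunks

paragraphs = ["one two three", "four five", "six seven eight nine"]
chunks = chunk_paragraphs(paragraphs, max_tokens=5)
print(chunks)  # [['one two three', 'four five'], ['six seven eight nine']]
```

A single paragraph that is larger than the budget still ends up in a chunk of its own here, so a real version would need to split or shorten it.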

Token Cost: What It Means

Tokens also directly influence the cost in most AI systems. You’re paying by the number of tokens, not the number of words or sentences. So, if a long-winded prompt uses 500 tokens, trimming it down could save on costs.
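The arithmetic is simple enough to sketch. The price below is invented purely for illustration, as is the 200-token trimmed prompt; check your provider’s pricing page for real numbers, and note that many providers charge for output tokens as well.

```python
def estimate_cost(num_tokens, price_per_1k_tokens):
    # Cost scales linearly with the number of tokens.
    return num_tokens / 1000 * price_per_1k_tokens

PRICE_PER_1K = 0.002  # hypothetical price per 1,000 tokens, not a real quote

long_cost = estimate_cost(500, PRICE_PER_1K)   # the long-winded prompt
short_cost = estimate_cost(200, PRICE_PER_1K)  # assumed size after trimming

print(f"long: {long_cost:.4f}  short: {short_cost:.4f}")
```

One prompt costs next to nothing either way; the difference adds up when the same prompt runs thousands of times.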

Here’s where things get practical. Let’s say you’ve got a token limit and a long prompt: if you’re looking for speed and lower cost, shorter is better.

Here’s an example:

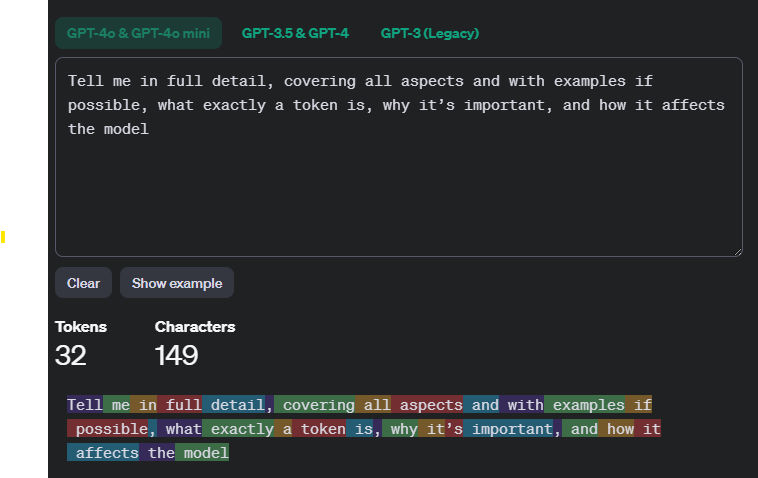

Imagine feeding in the prompt, "Explain what tokens are in a short sentence." A prompt like this might take 10 tokens or so, whereas "Tell me in full detail, covering all aspects and with examples if possible, what exactly a token is, why it’s important, and how it affects the model" could end up using 30+ tokens, costing you more.
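If you just want a ballpark figure without running a tokenizer, a common rule of thumb for English text is about four characters per token. The sketch below applies that rule to the two prompts; it is only an estimate, the function name `estimate_tokens` is made up here, and a real tokenizer will give somewhat different counts.

```python
def estimate_tokens(text, chars_per_token=4):
    # Rule-of-thumb estimate for English text, not an exact token count.
    return max(1, round(len(text) / chars_per_token))

short_prompt = "Explain what tokens are in a short sentence."
long_prompt = (
    "Tell me in full detail, covering all aspects and with examples "
    "if possible, what exactly a token is, why it's important, and "
    "how it affects the model"
)

short_estimate = estimate_tokens(short_prompt)
long_estimate = estimate_tokens(long_prompt)
print(short_estimate, long_estimate)
```

The short prompt comes out at around 11 by this estimate, which lines up with the "10 tokens or so" guess above.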

Tokens are basically the secret sauce that keeps language models efficient, making it possible to break down complex language into bite-sized pieces.

Want to see how OpenAI counts tokens? Take a look at this site:

https://platform.openai.com/tokenizer
